2024 ESIF Economics and AI+ML Meeting: August, 2024

LABOR-LLM: Language-Based Occupational Representations with Large Language Models

Susan Athey, Herman Brunborg, Tianyu Du, Ayush Kanodia, Keyon Vafa

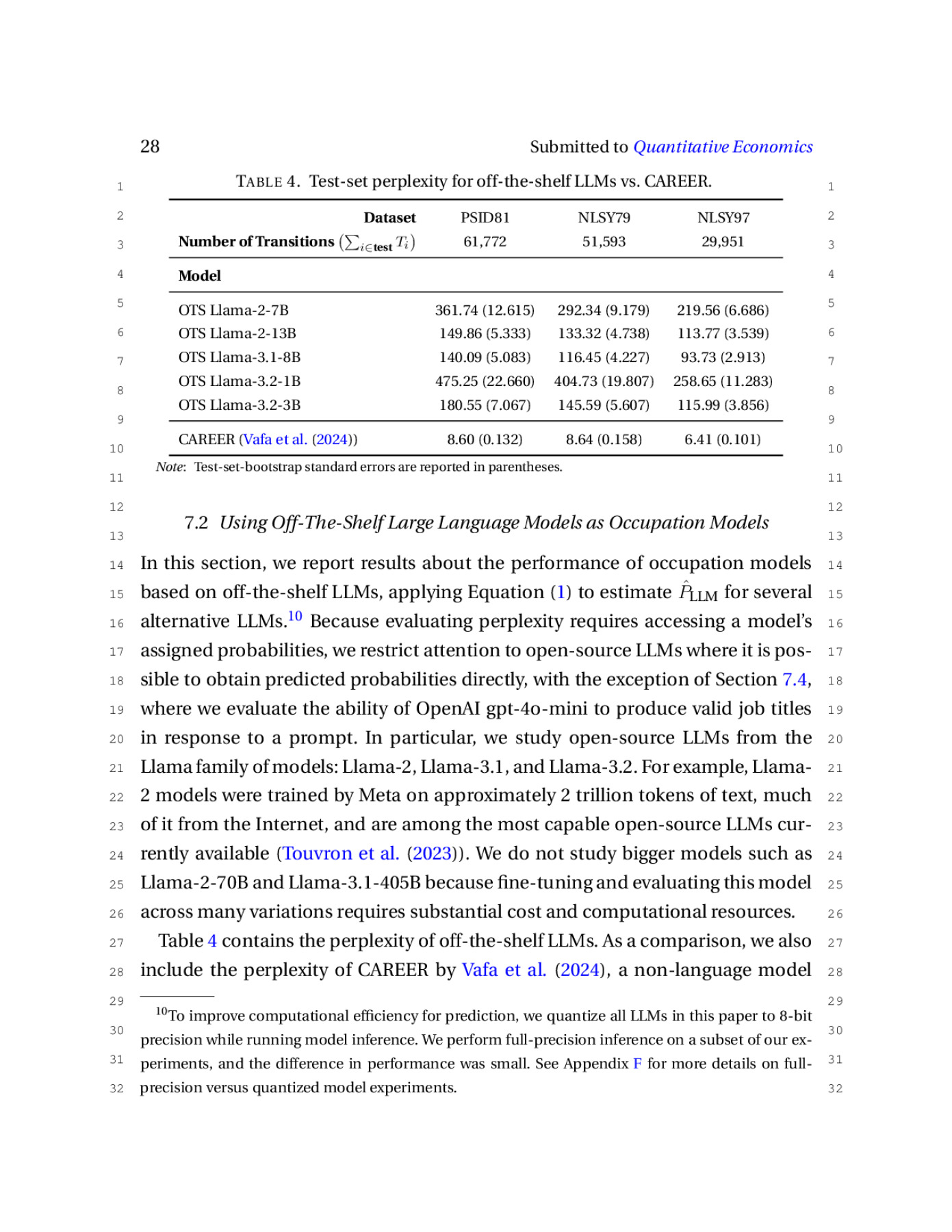

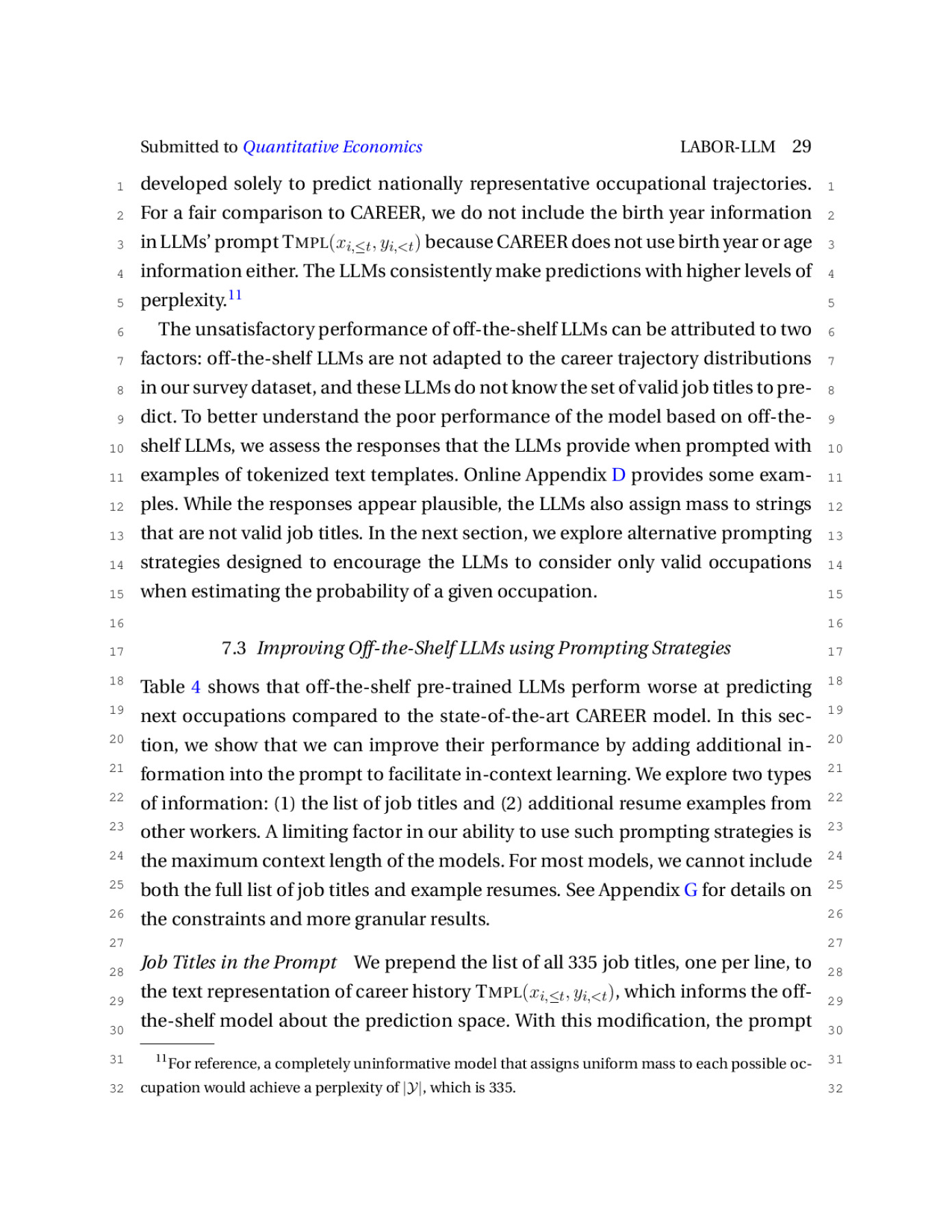

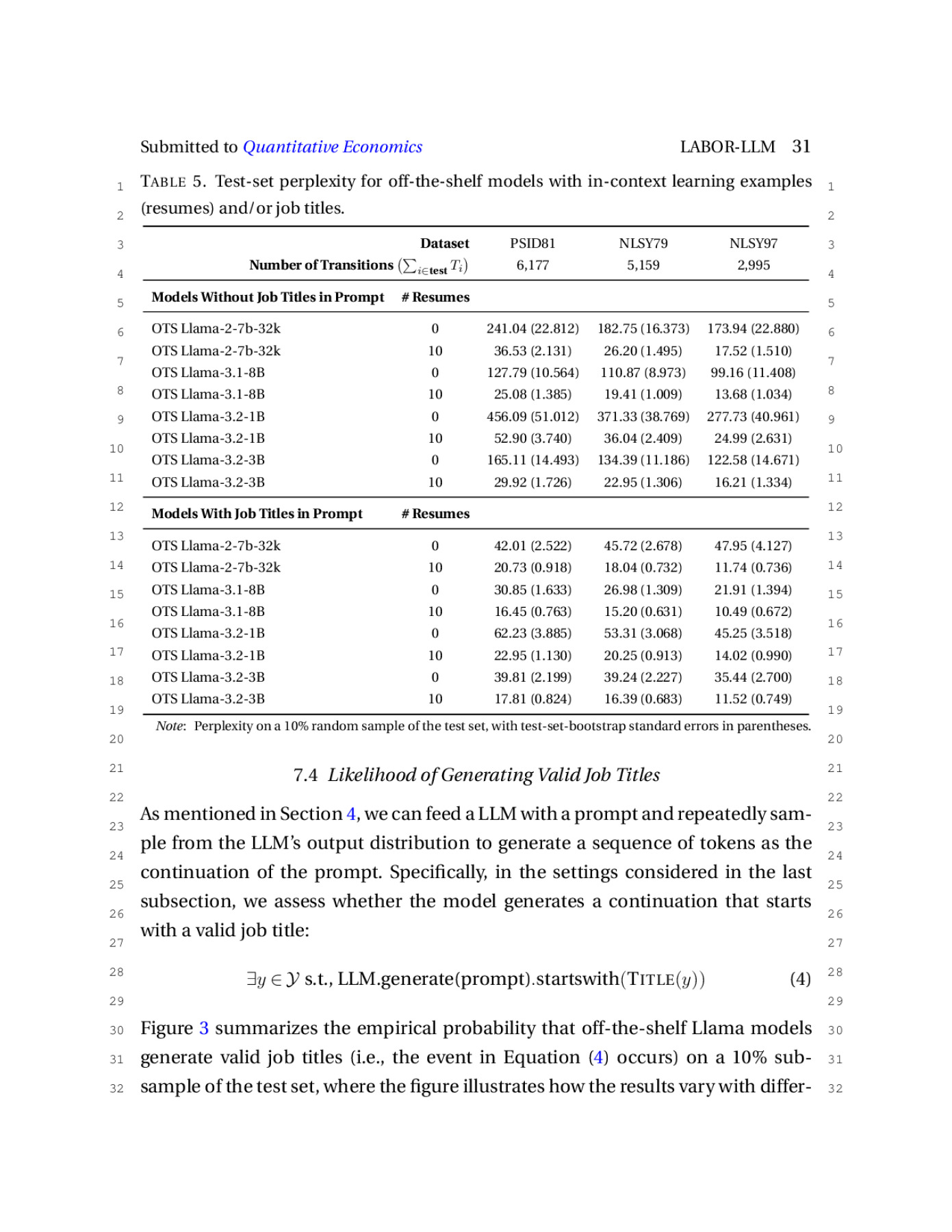

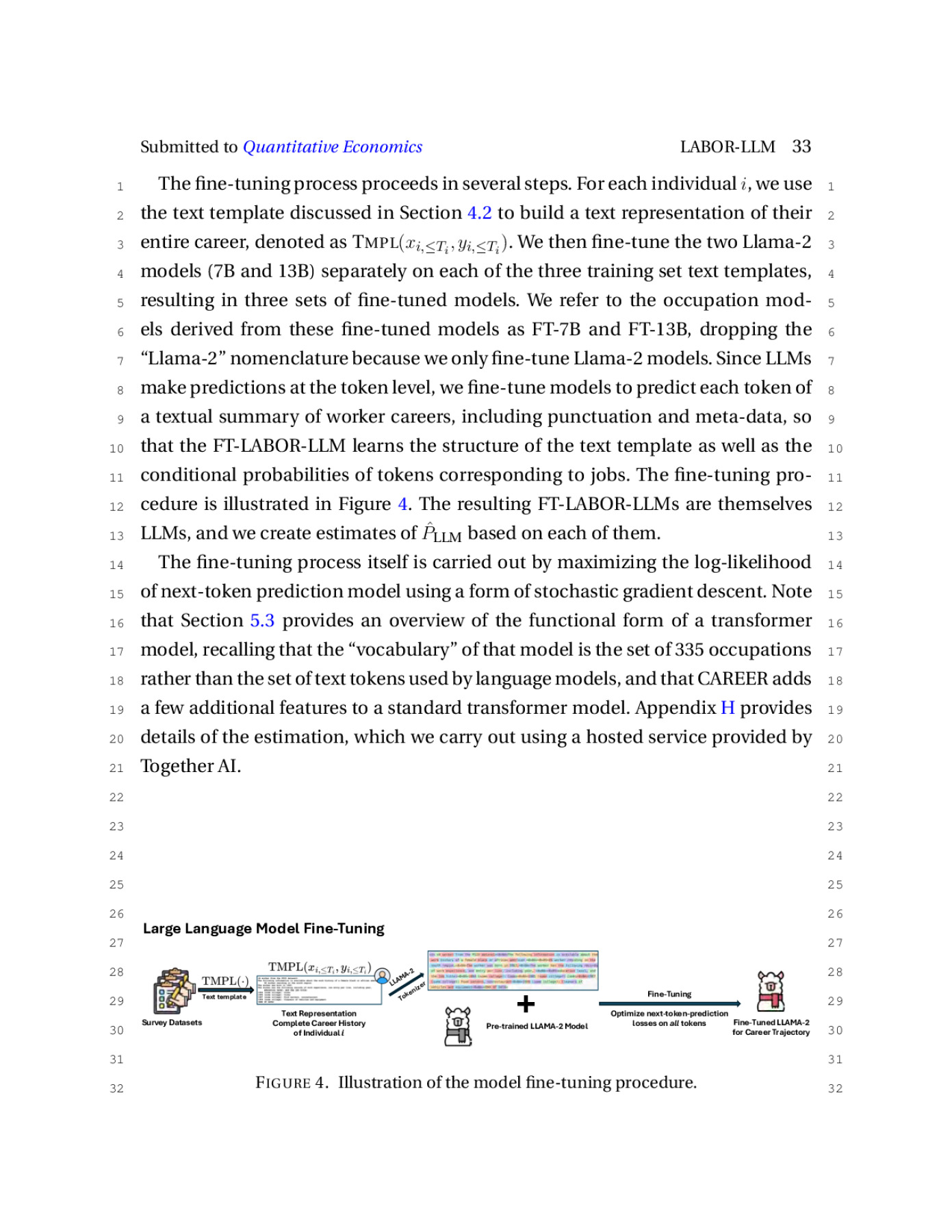

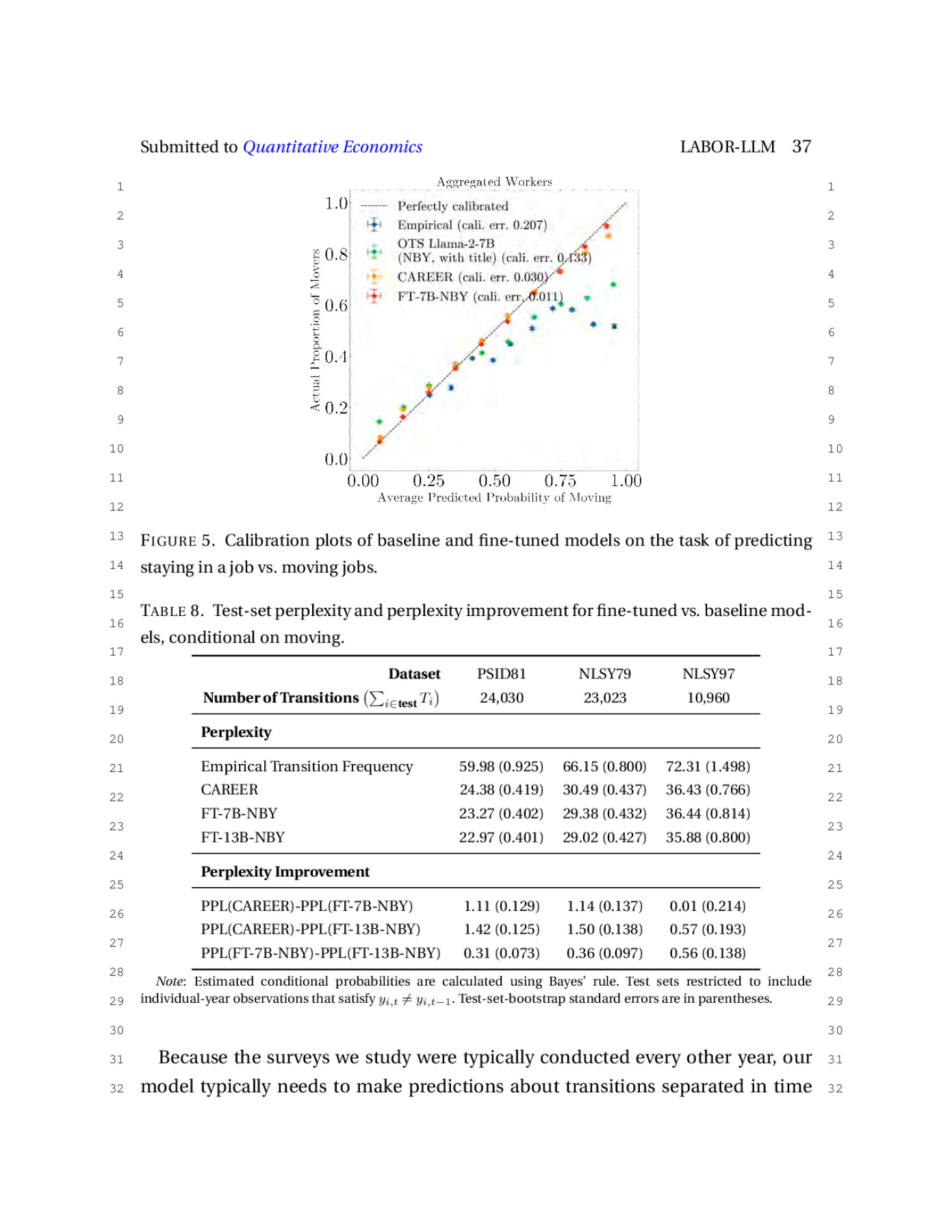

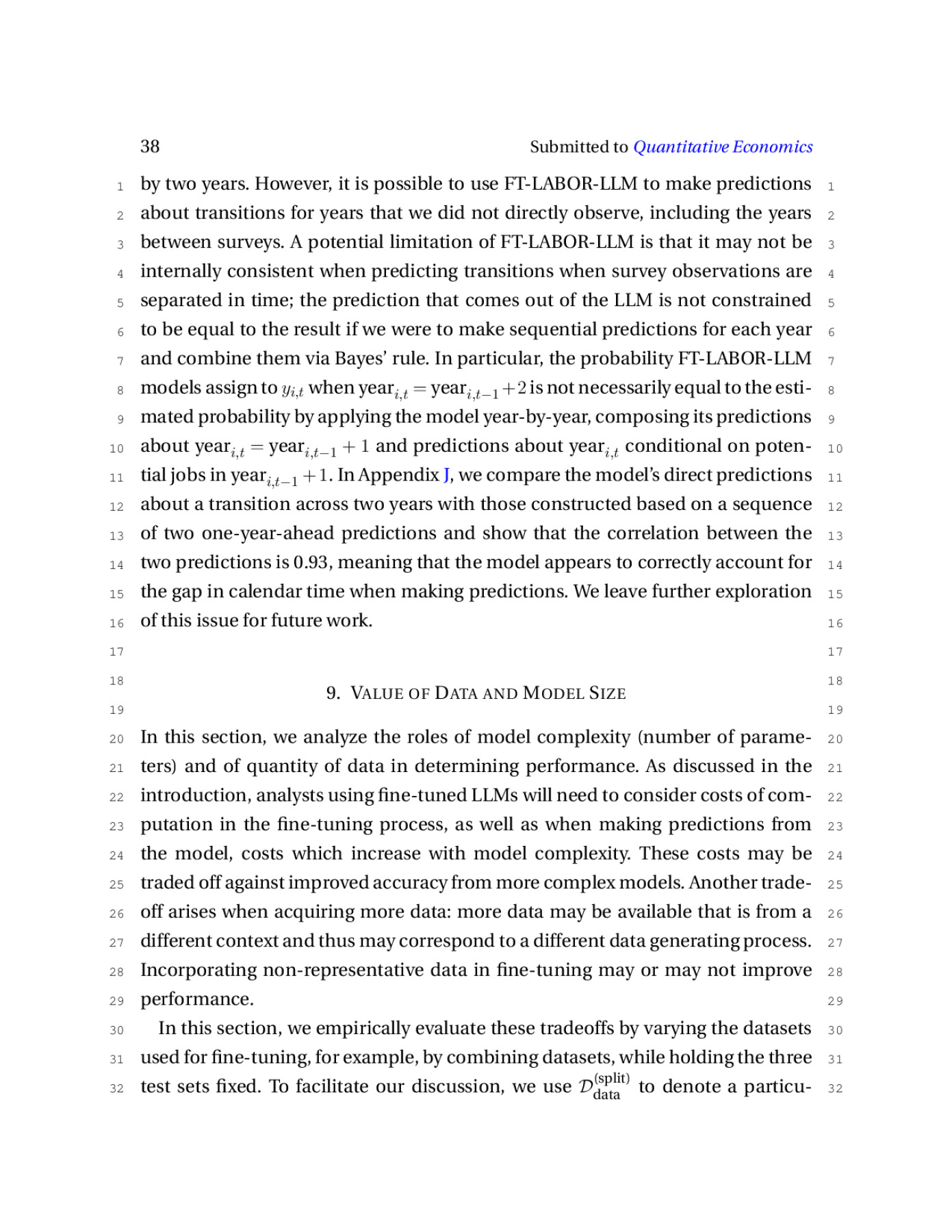

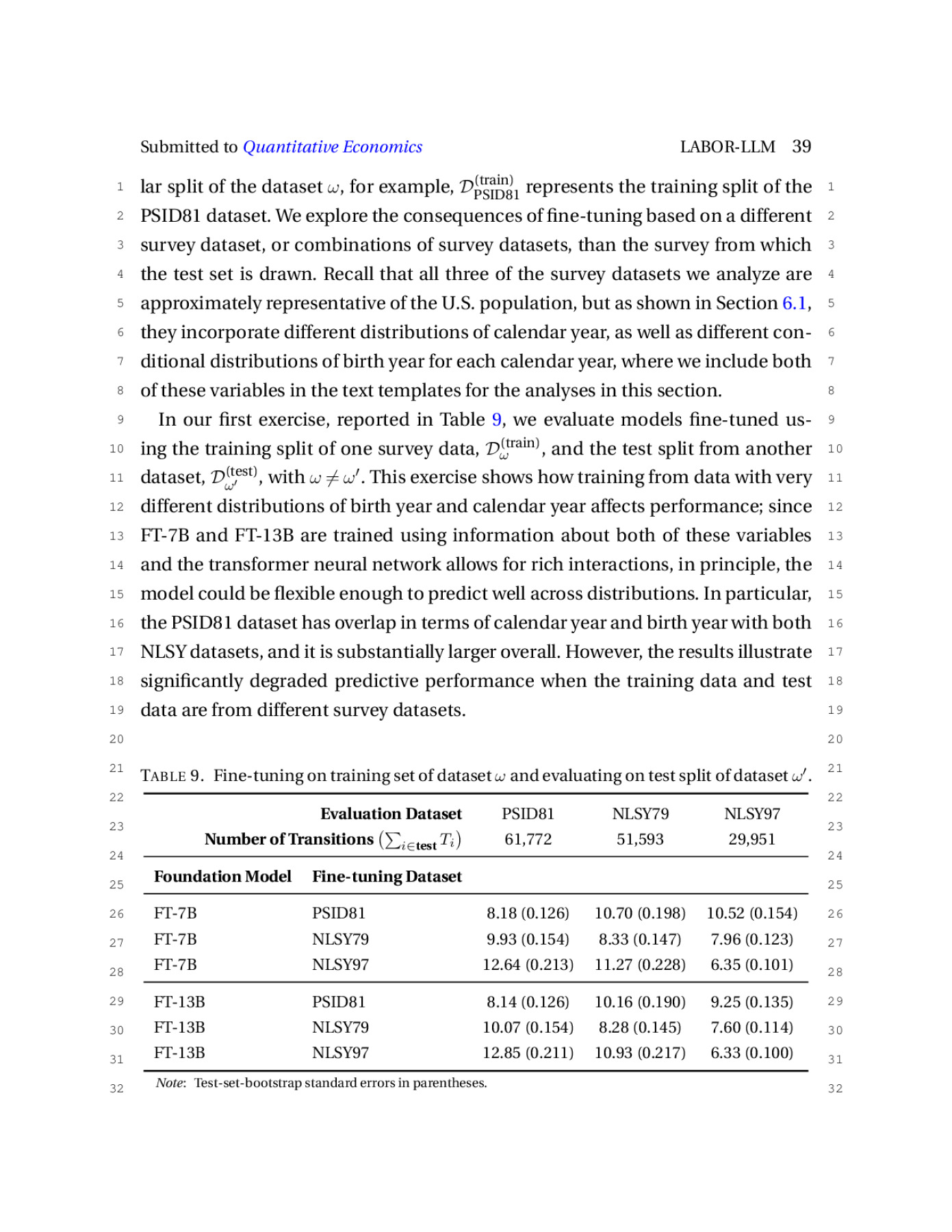

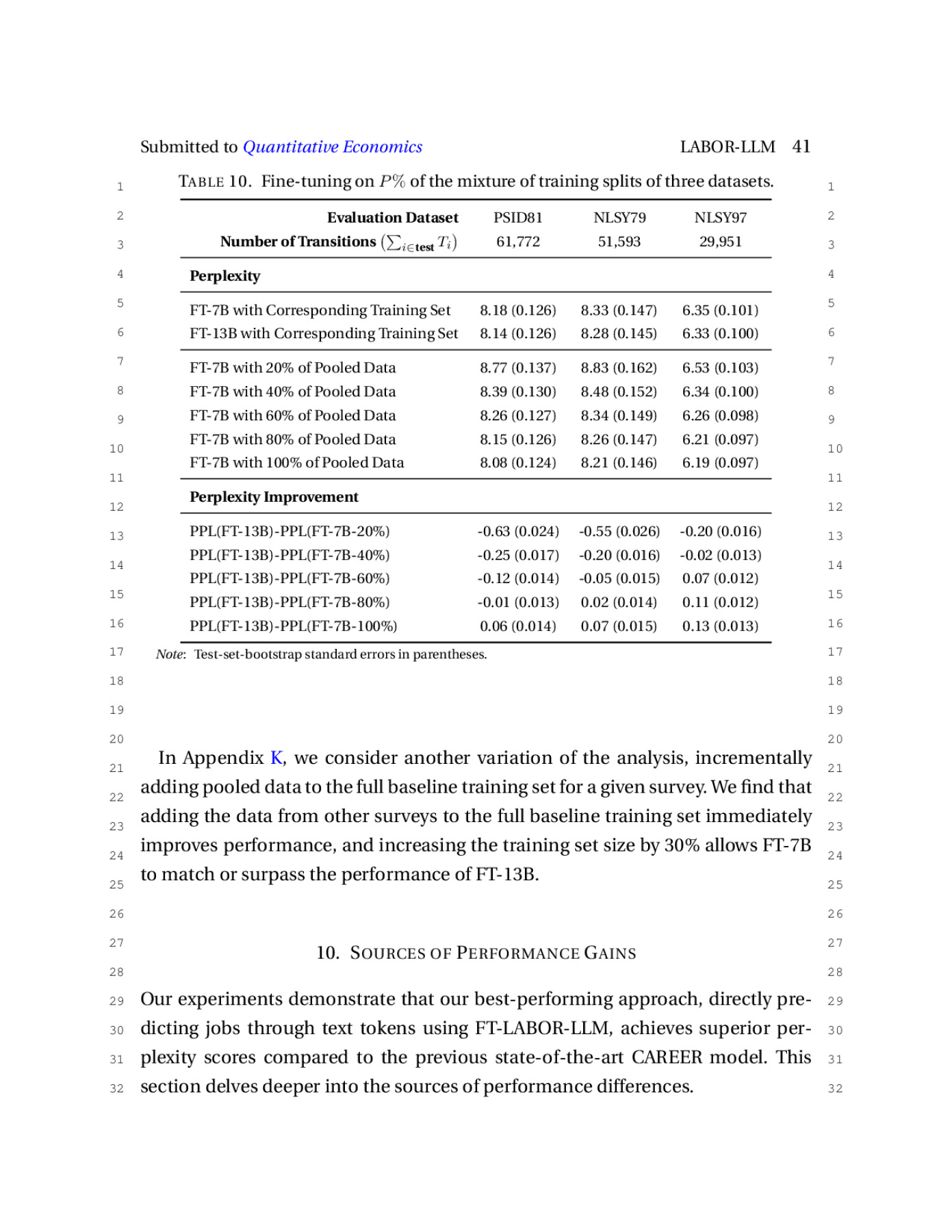

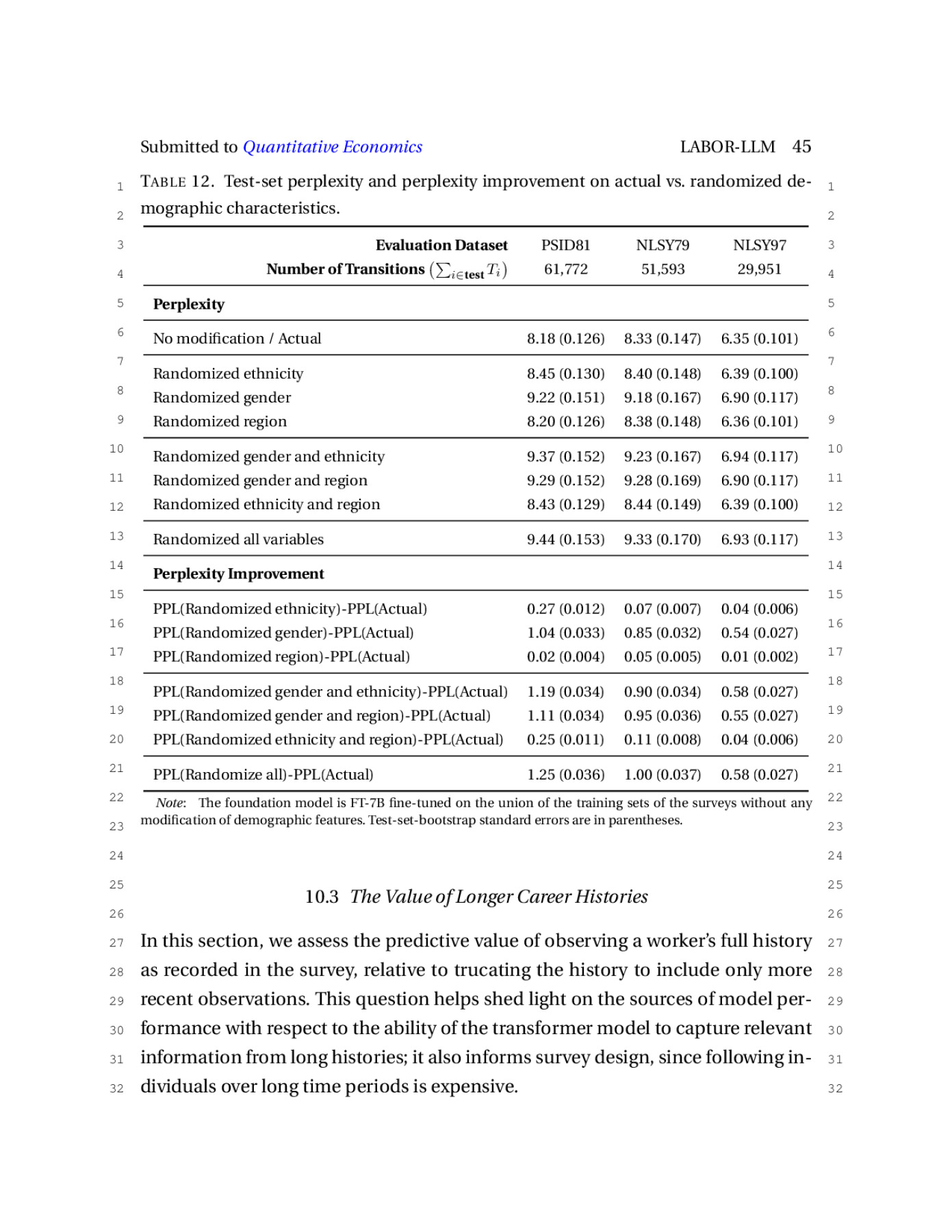

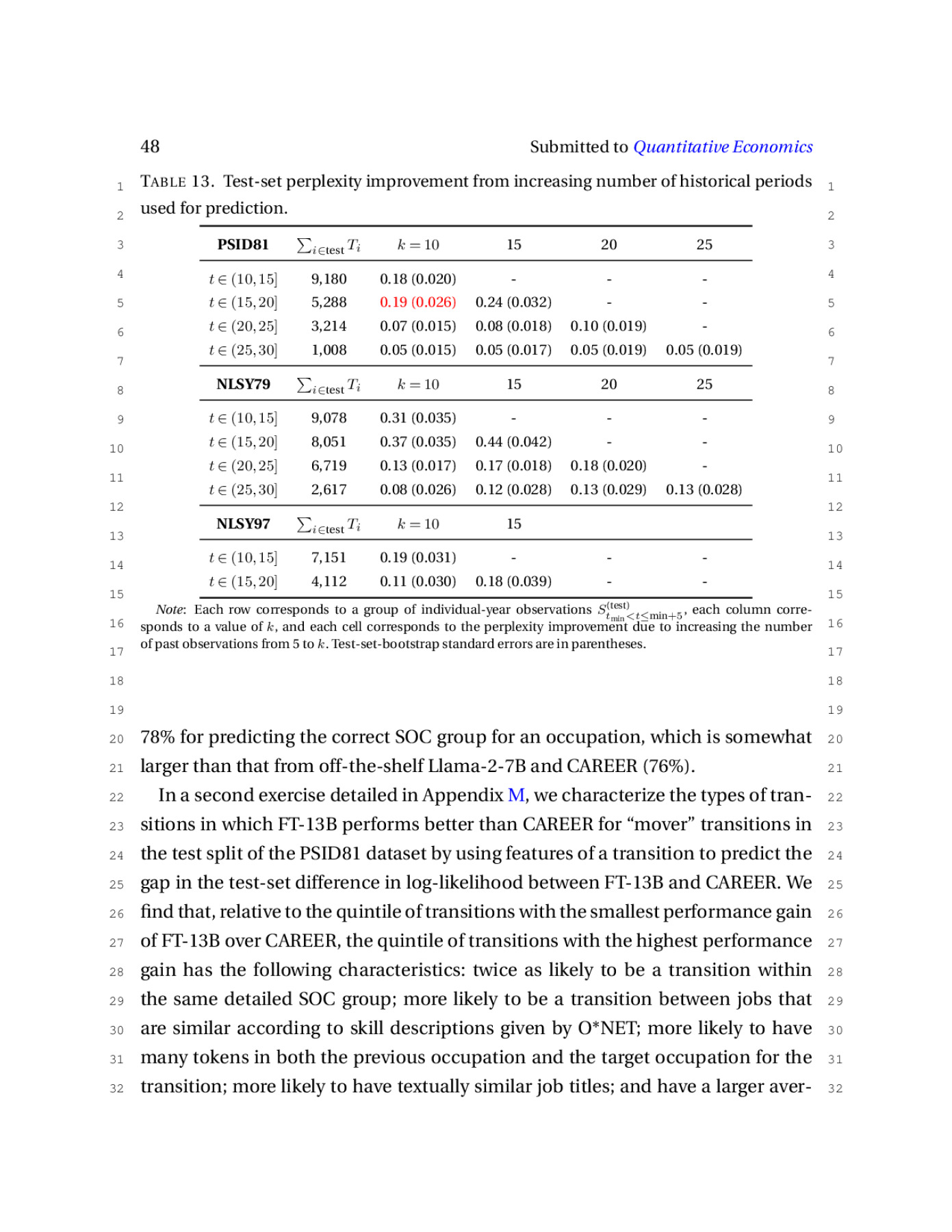

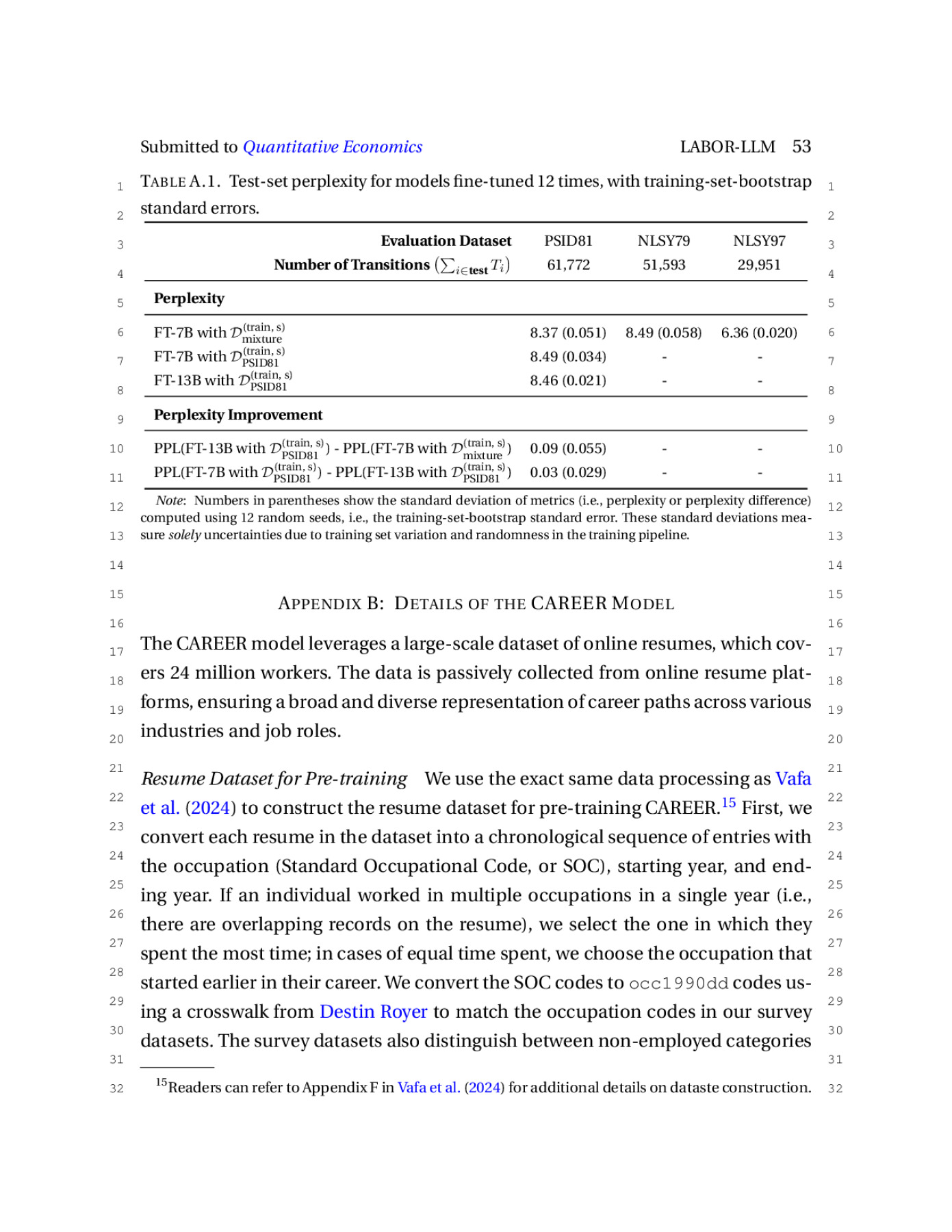

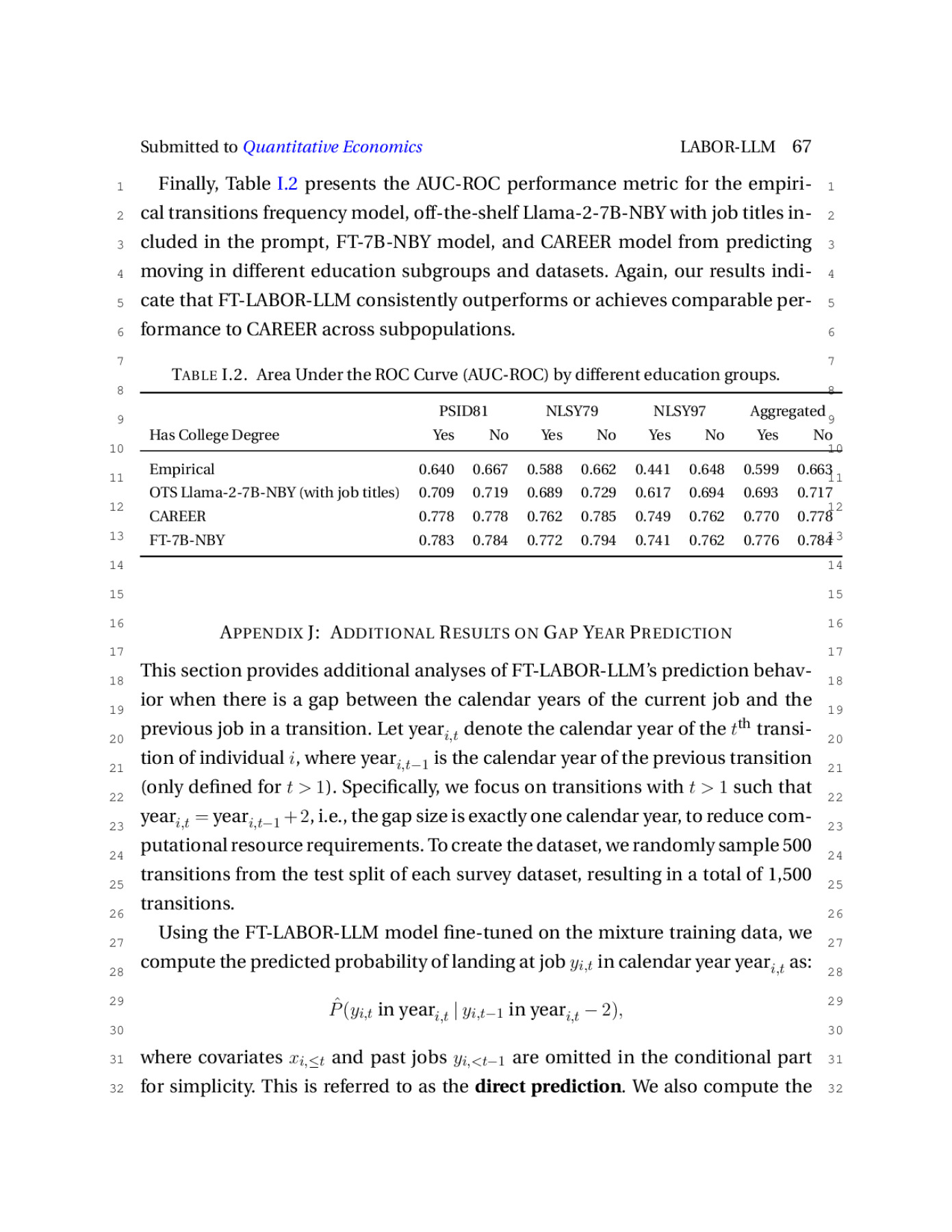

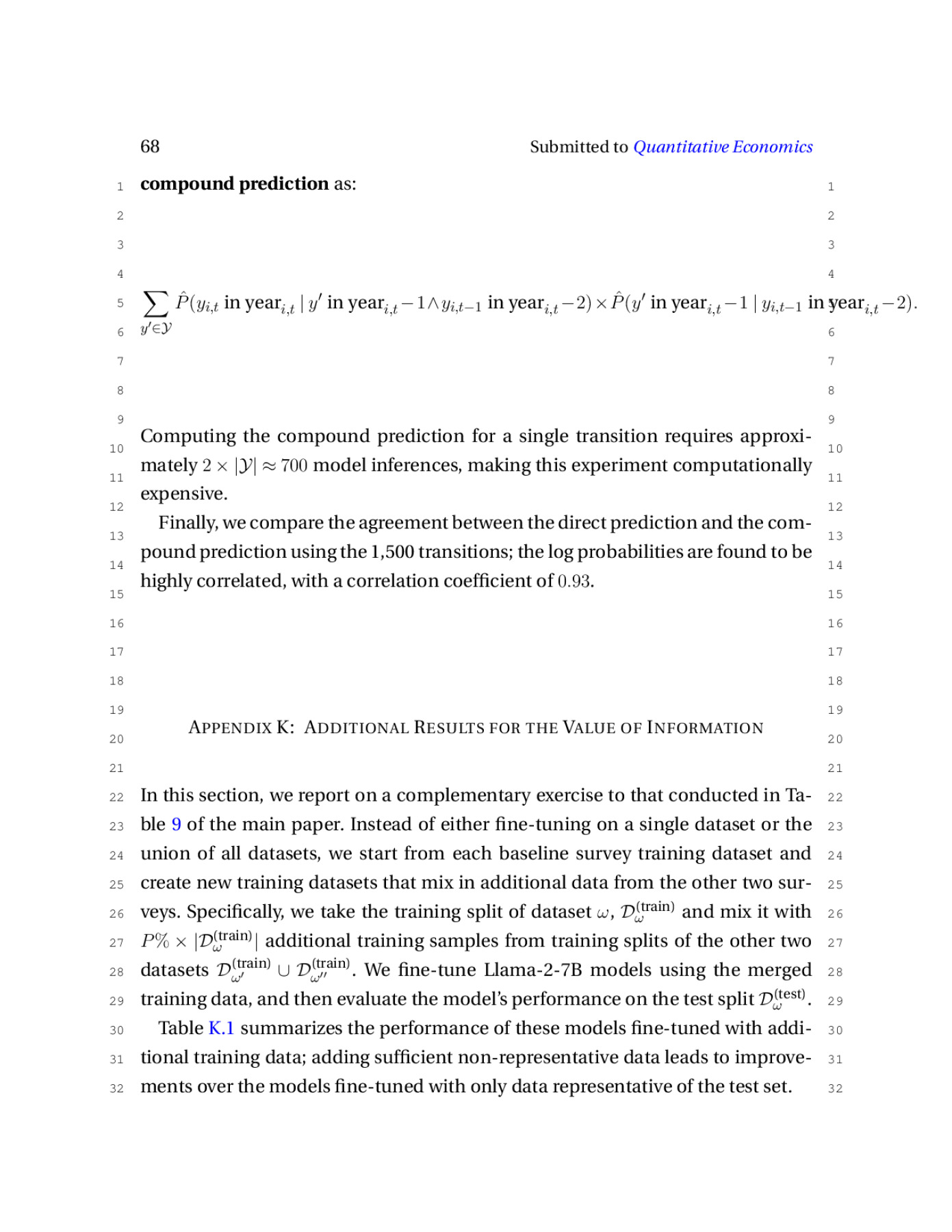

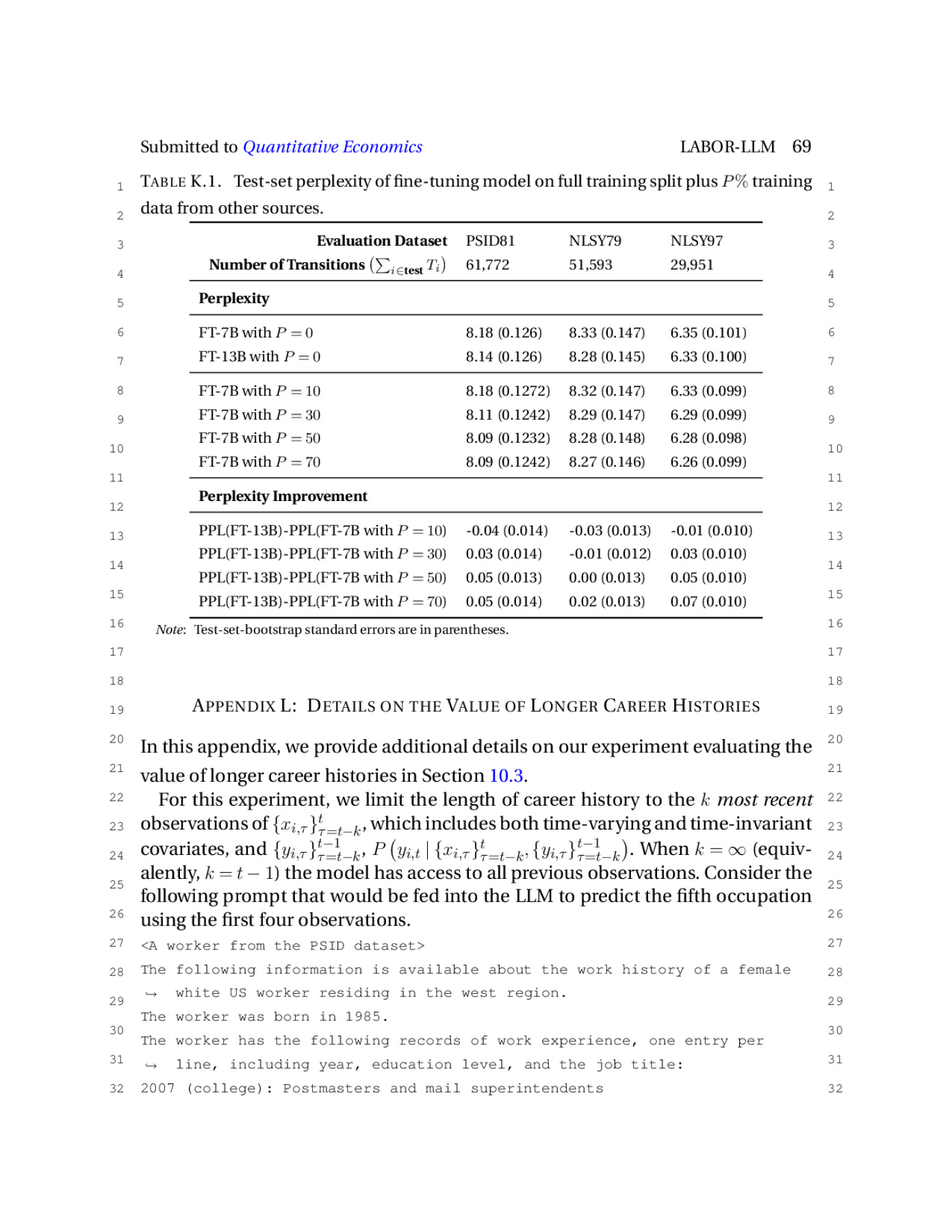

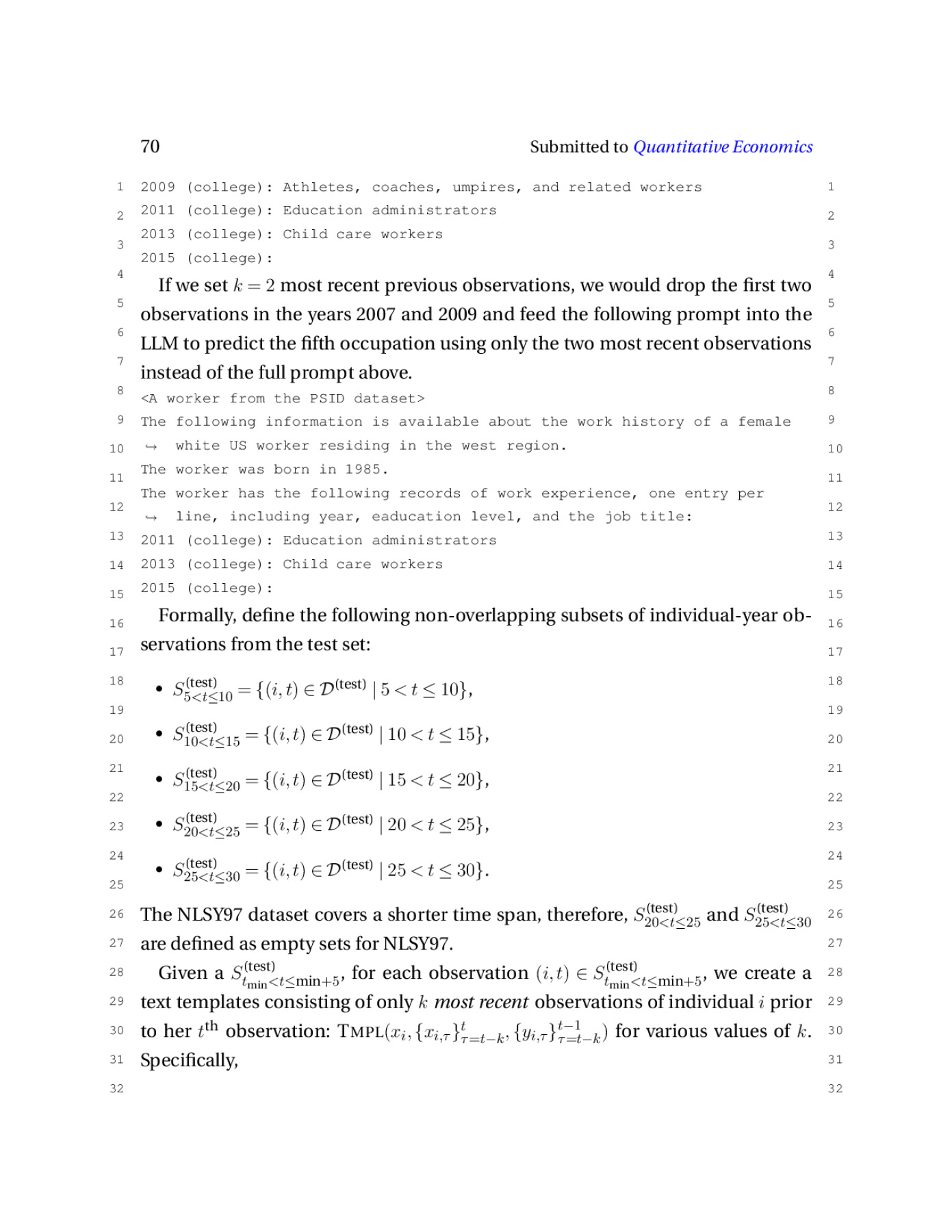

Vafa et al. (2024) introduced a transformer-based econometric model, CAREER, that predicts a worker's next job as a function of career history (an "occupation model"). CAREER was initially estimated ("pre-trained") on a large, unrepresentative resume dataset, yielding a "foundation model," and parameter estimation was then continued ("fine-tuned") using data from a representative survey. CAREER had better predictive performance than benchmark models. This paper considers an alternative in which the resume-based foundation model is replaced by a large language model (LLM). We convert tabular data from the survey into text files that resemble resumes and fine-tune the LLMs on these text files with the objective of predicting the next token (word). The resulting fine-tuned LLM is used as an input to an occupation model. Its predictive performance surpasses that of all prior models. We demonstrate the value of fine-tuning and further show that, when more career data from a different population is added, fine-tuning smaller LLMs surpasses the performance of fine-tuning larger models.
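The conversion of tabular survey records into resume-like text can be illustrated with a minimal sketch. The field names (`education`, `year`, `occupation`), the text layout, and the helper functions below are all hypothetical choices made for illustration; the abstract does not specify the template the authors use.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class JobRecord:
    """One row of a (hypothetical) tabular career history."""
    year: int
    occupation: str


@dataclass
class Worker:
    """A survey respondent; the fields are illustrative assumptions."""
    education: str
    history: List[JobRecord]


def career_to_text(worker: Worker) -> str:
    """Render a worker's tabular history as resume-like text, one job per line."""
    lines = [f"Education: {worker.education}"]
    for job in sorted(worker.history, key=lambda j: j.year):
        lines.append(f"{job.year}: {job.occupation}")
    return "\n".join(lines)


def prediction_example(worker: Worker, target_index: int) -> Tuple[str, str]:
    """Split a career into (prompt, completion) for next-job prediction.

    The prompt holds every job before `target_index` (in chronological
    order) plus the year of the target job; the completion is the
    occupation title a language model would be asked to produce.
    """
    jobs = sorted(worker.history, key=lambda j: j.year)
    if not 0 <= target_index < len(jobs):
        raise IndexError("target_index is outside the career history")
    target = jobs[target_index]
    context = Worker(education=worker.education, history=jobs[:target_index])
    prompt = career_to_text(context) + f"\n{target.year}: "
    return prompt, target.occupation


if __name__ == "__main__":
    worker = Worker(
        education="College graduate",
        history=[
            JobRecord(2001, "Cashier"),
            JobRecord(2003, "Software developer"),
        ],
    )
    prompt, completion = prediction_example(worker, target_index=1)
    print(prompt + completion)
```

Text produced this way could serve as fine-tuning data under a next-token objective, with the probability a model assigns to each candidate occupation title after the prompt read as a next-job prediction. That last step is an interpretation of the abstract rather than a description of the authors' implementation.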

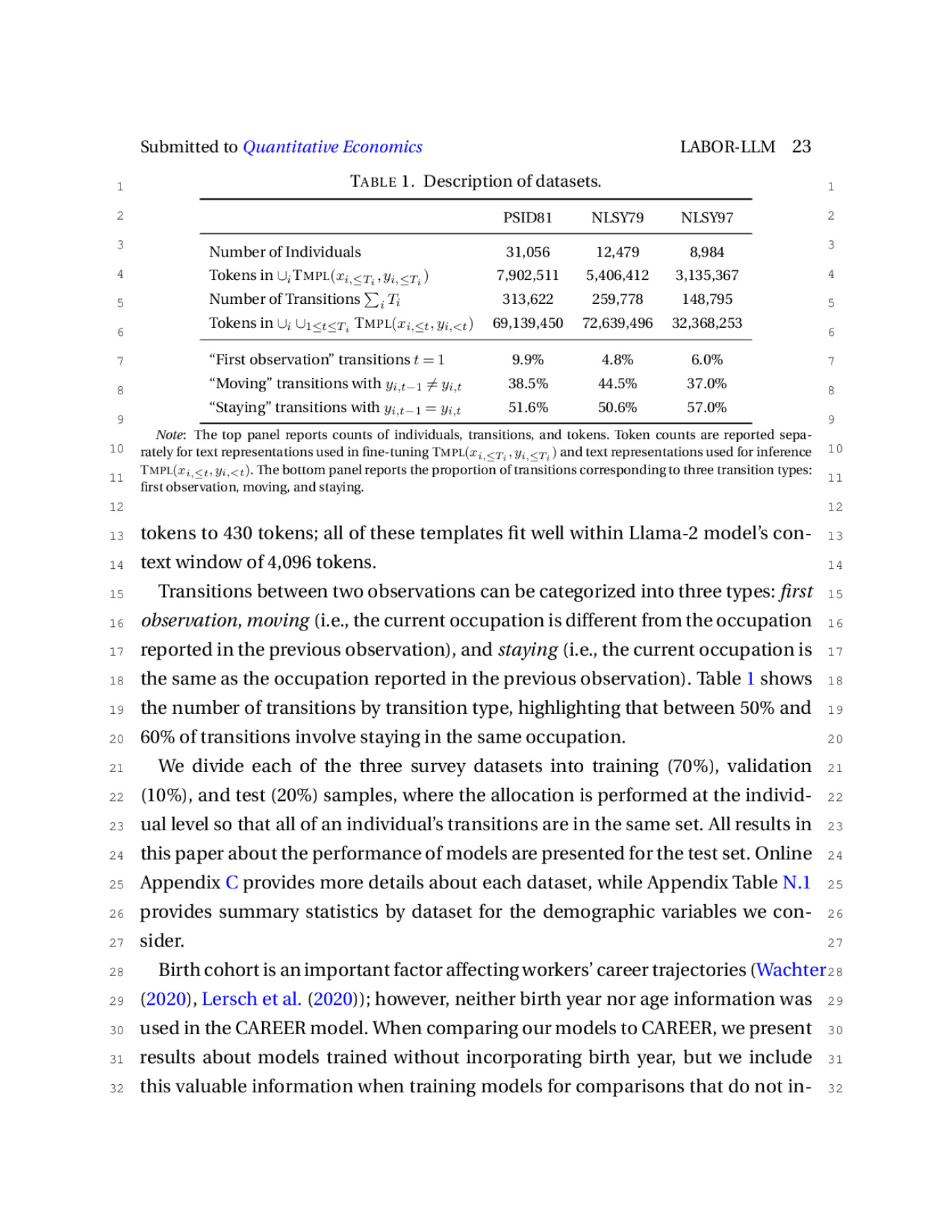

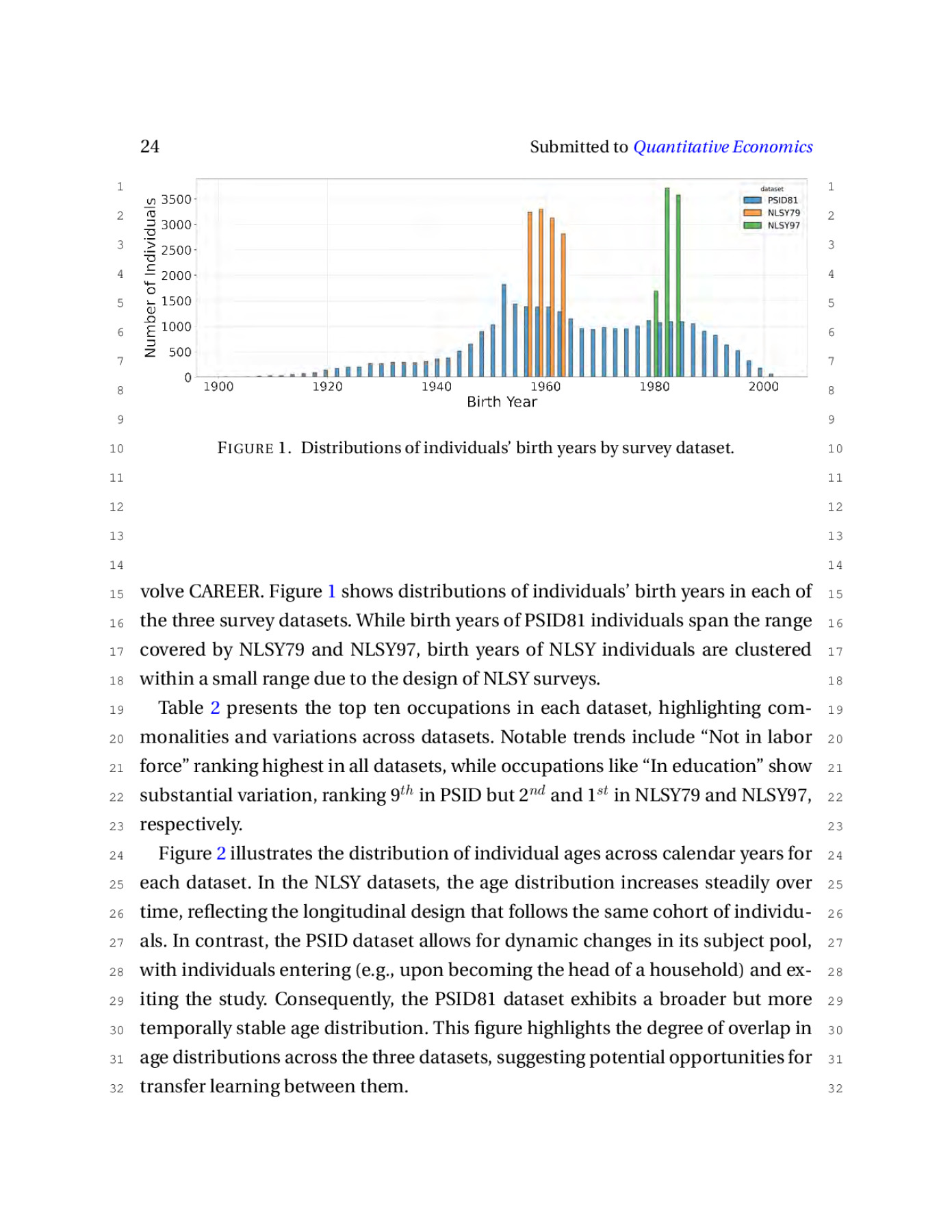

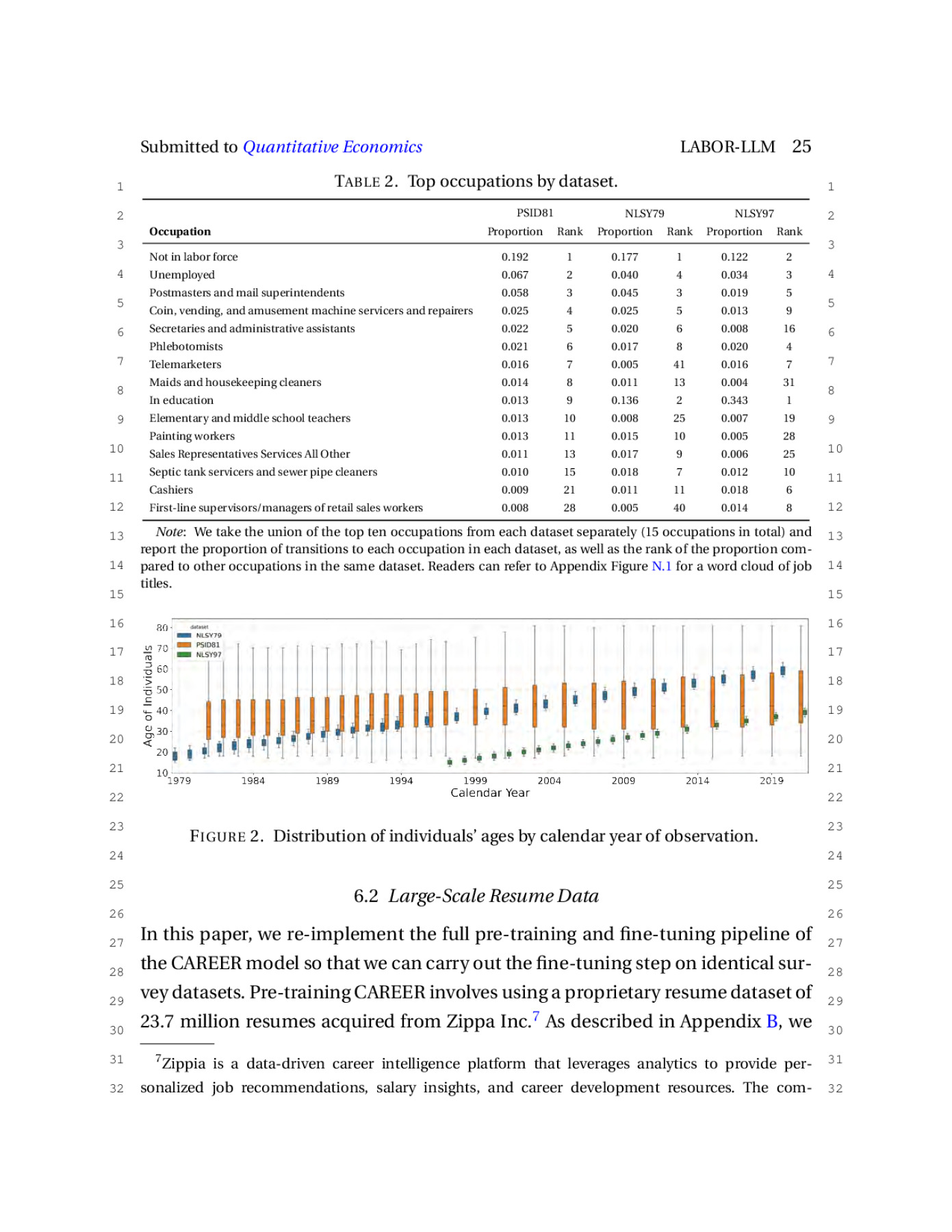

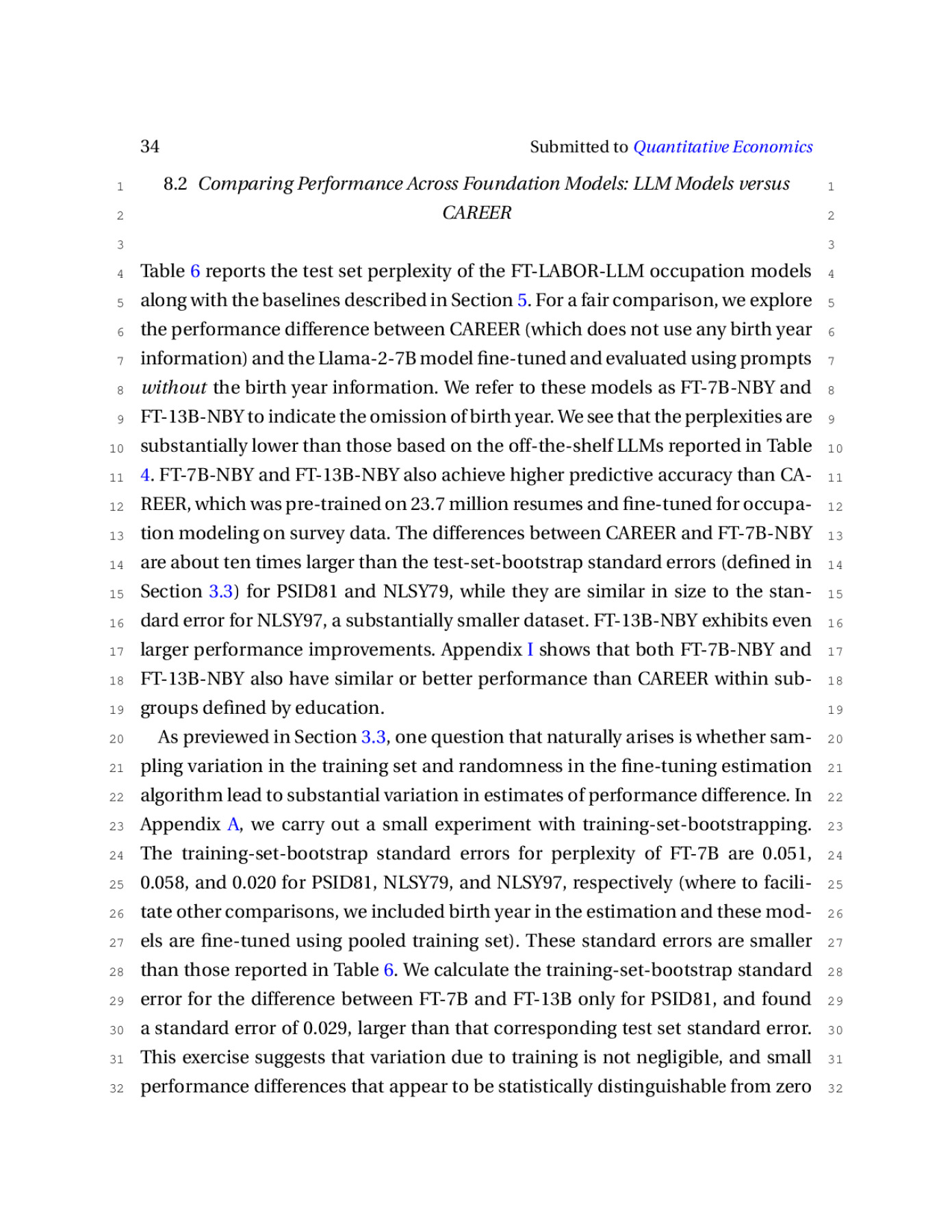

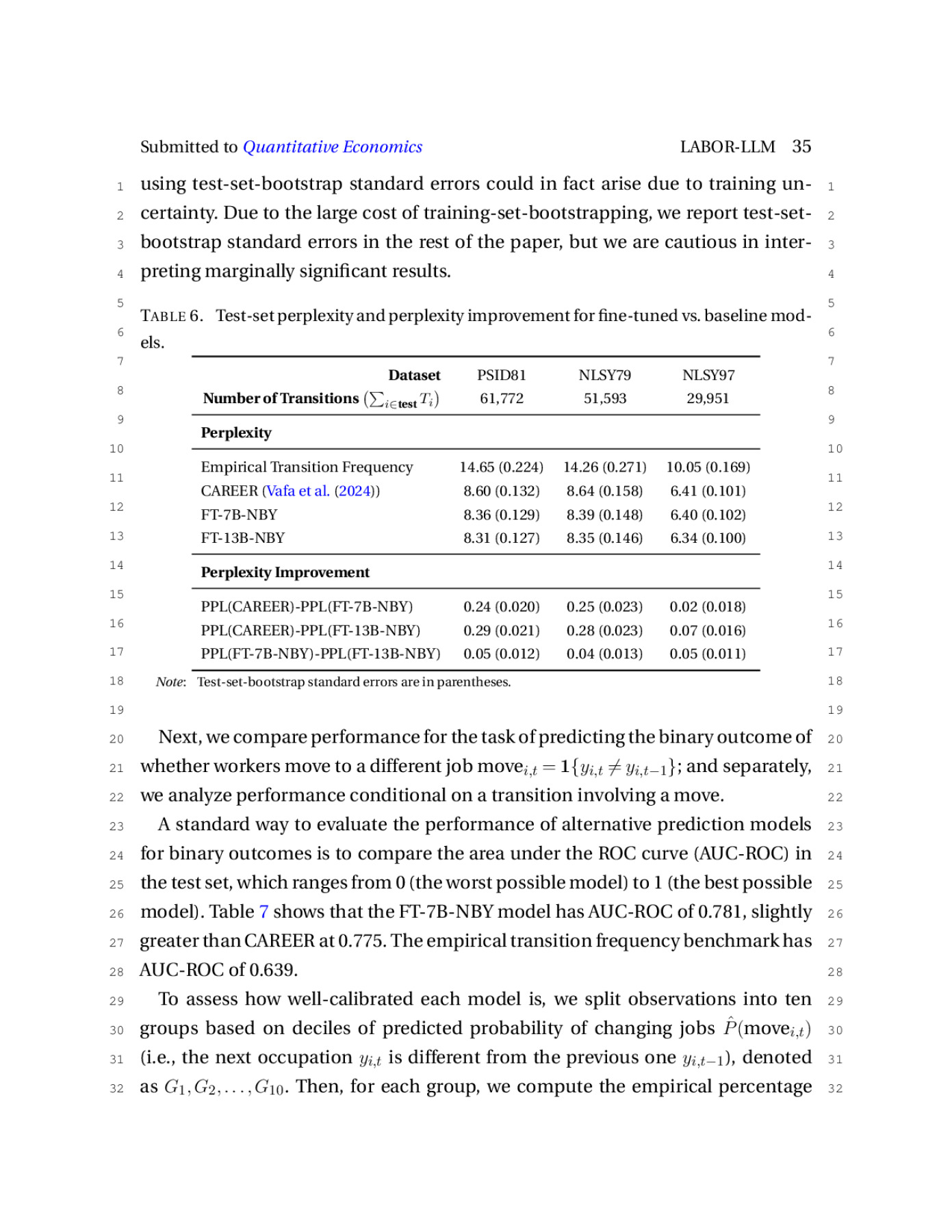
